ElasTest ambition

Software testing is one of the most complex and least understood areas of software engineering. When creating software, the testing process commonly accounts for the largest share of the cost, effort and time-to-market of the whole software development lifecycle. For example, the software testing book by Mili and Tchier reports that the creation of Windows Server 2003 required around 2,000 engineers for analysis and development but 2,400 for testing. This situation is getting worse with the emergence of highly distributed and interconnected heterogeneous environments (i.e., Systems in the Large, SiL), and appropriately testing SiL is becoming prohibitively expensive for many software and service providers.

This deters innovation and creates significant risks for society, given the pervasiveness of software in most human activities. The situation is mainly caused by complexity effects. When a software under test (SuT) grows by N lines of code, the complexity of its tests does not grow proportionally, because these N new lines may introduce many new interactions. Hence, software grows linearly but its testing complexity may grow exponentially.
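To make the combinatorial argument concrete, the following minimal sketch counts how many potential interactions a system of n components could expose. It rests on a simplifying assumption of ours, not a measured model: any subset of two or more components is treated as a potential interaction that a thorough test suite might need to exercise. Under that assumption, pairwise interactions grow quadratically, while the number of candidate interacting subsets grows exponentially.

```python
from math import comb


def pairwise_interactions(n: int) -> int:
    """Number of distinct component pairs among n components: n*(n-1)/2."""
    return comb(n, 2)


def interacting_subsets(n: int) -> int:
    """Number of subsets with at least two components: 2**n - n - 1.

    Illustrative assumption: every such subset is a potential interaction
    that may need to be tested.
    """
    return 2 ** n - n - 1


if __name__ == "__main__":
    for n in (2, 5, 10, 20):
        print(f"n={n:>2}  pairs={pairwise_interactions(n):>4}  "
              f"subsets={interacting_subsets(n):>8}")
```

For 10 components this gives 45 pairs but 1,013 candidate subsets; doubling to 20 components gives 190 pairs and 1,048,555 subsets.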

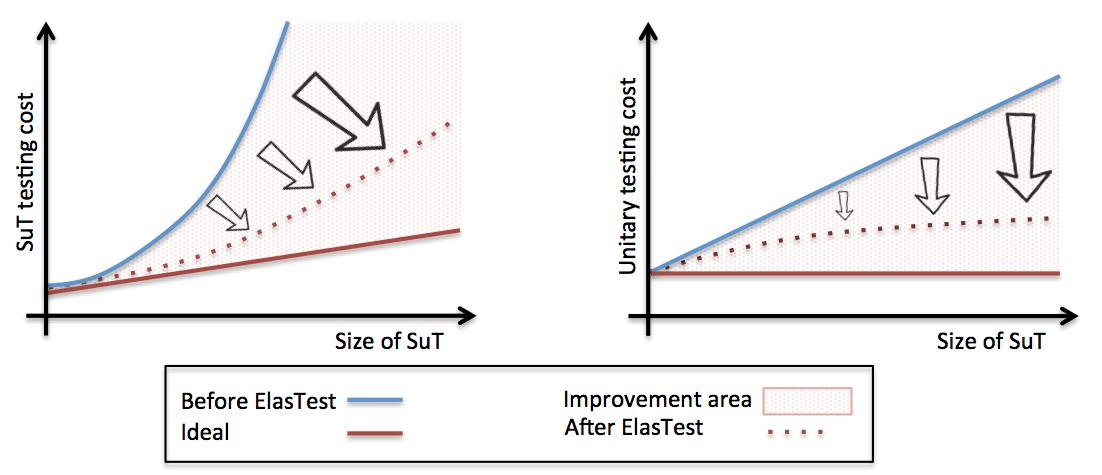

As introduced before, a SiL is an aggregation of systems in the small (SiS). Hence, following the same logic, when integrating a new SiS in a SiL the final testing costs are significantly over the ones of testing independently the comprising SiS. This effect is well known in software engineering and it is illustrated in the next figure.

Our assumption is that SiL grow through the aggregation of SiS that interact following some orchestration rules. Hence, we hypothesize that appropriately orchestrating T-Jobs should also enable the validation of the interactions among such functions. As a result, in the ideal case (i.e., zero test orchestration effort), the marginal cost of testing a newly added function should be constant (red line, right picture) and the total cost of SiL testing should grow linearly (red line, left picture). In the current state of the art (SotA), test orchestration technologies are not widespread and are built ad hoc, which is why interactions are typically tested manually, leading to increasing marginal costs (blue line, right picture) and super-linear total costs (blue line, left picture).

As can be observed in the previous figure, in an ideal world in which the SiS inside a SiL do not interact (solid red line), testing costs would grow linearly with the size of the SiL (left picture) and the marginal cost of testing would be constant (right picture). In reality (solid blue line), however, testing costs grow super-linearly (left picture) because the marginal cost of testing is not constant (right picture), owing to the complex interactions within the SiL. In this context, our ambition is to get as close as possible to that ideal situation, building on the ElasTest ideas.
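The relation between the two pictures can be sketched with a toy cost model. The parameters are hypothetical and chosen only for illustration: a fixed cost `c` for testing each SiS, plus a cost `k` for each interaction with an already integrated SiS that has to be tested manually. Total cost is simply the running sum of marginal costs, so a constant marginal cost (`k = 0`, the ideal case) yields a linear total, while a marginal cost that grows with the number of integrated SiS yields a quadratic, i.e. super-linear, total.

```python
from itertools import accumulate


def marginal_costs(n: int, c: float, k: float) -> list[float]:
    """Marginal cost of testing the i-th added SiS, for i = 1..n.

    Hypothetical model: a fixed cost c per SiS plus a cost k for each
    interaction with the i - 1 SiS already in the SiL. With k = 0 this is
    the ideal case with constant marginal cost.
    """
    return [c + k * (i - 1) for i in range(1, n + 1)]


def total_costs(n: int, c: float, k: float) -> list[float]:
    """Cumulative testing cost after adding 1..n SiS (sum of marginal costs)."""
    return list(accumulate(marginal_costs(n, c, k)))


if __name__ == "__main__":
    n, c = 10, 1.0
    ideal = total_costs(n, c, k=0.0)    # constant marginal cost -> linear total
    manual = total_costs(n, c, k=0.5)   # growing marginal cost -> super-linear total
    for i, (a, b) in enumerate(zip(ideal, manual), start=1):
        print(f"{i:>2} SiS: ideal={a:5.1f}  manual={b:5.1f}")
```

With `c = 1` and `k = 0.5`, ten SiS cost 10.0 units in the ideal case but 32.5 when interactions are tested manually; in closed form the total under this model is `c*n + k*n*(n-1)/2`.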

ElasTest's ambition is to advance the SotA by creating novel test orchestration technologies that bring SiL testing as close as possible to this ideal.

ElasTest progresses beyond the state of the art

ElasTest will provide significant advances beyond the SotA from both a scientific and an innovation perspective. The following table summarizes these advances.

| Competences | Current SotA | Expected SotA evolutions |
|---|---|---|
| Test orchestration | No notion of orchestration; limited methods or tools for testing SiL; strong assumptions on tests and/or SuT; hard integration with CI tools | Solid orchestration notation (topology); systematic divide-and-conquer testing of SiL; no assumptions on tests or SuT; ready to be used with CI tools |
| Non-functional testing | Non-functional testing is a challenge; poor tools and methodologies; ad-hoc, non-reusable code required; no tests for costs or energy; no tools for multimedia QoE testing; no tools for IoT QoS testing | Non-functional testing is a feature; powerful tool and methodology; reusable orchestration logic; ready for cost and energy testing; ready for multimedia QoE testing; ready for QoS testing in IoT environments |
| Security testing | Simple client/server configuration testing; specific to one vulnerability of an application; limited protocol semantics; crash oracle | Support for multiple entities; generic Security Check as a Service framework; fine-grained protocol grammar language; fine-grained oracles |
| GUI automation and impersonation | GUI user impersonation; poor tools for code reusability | User, sensor and device impersonation; reusable SaaS test support services |
| Monitoring and runtime verification | Methodologies and tools for testing SiS; monitors are monolithic; no mechanism for monitor coordination; no mechanism for large fragmented logs | Methodologies and tools for testing SiL; full orchestration of monitors; language for monitor orchestration; algorithms for large and fragmented logs |
| Machine learning applications to testing | Focus on automating processes; work in lab and controlled environments | Focus on supporting the tester in: […]; work on real-world SiL |
| Cognitive Q&A systems | Powerful tool for knowledge reusability, but never applied to software testing | Q&A for designing T-Jobs; Q&A for designing orchestration; Q&A for test design strategy; full reusability of testing knowledge |
| Instrumentation | Only available in specific clouds; may require special permissions; fragmented, non-uniform APIs | Infrastructure-independent capabilities; available to all cloud users; coherent, unified and uniform API |