ERE with custom code

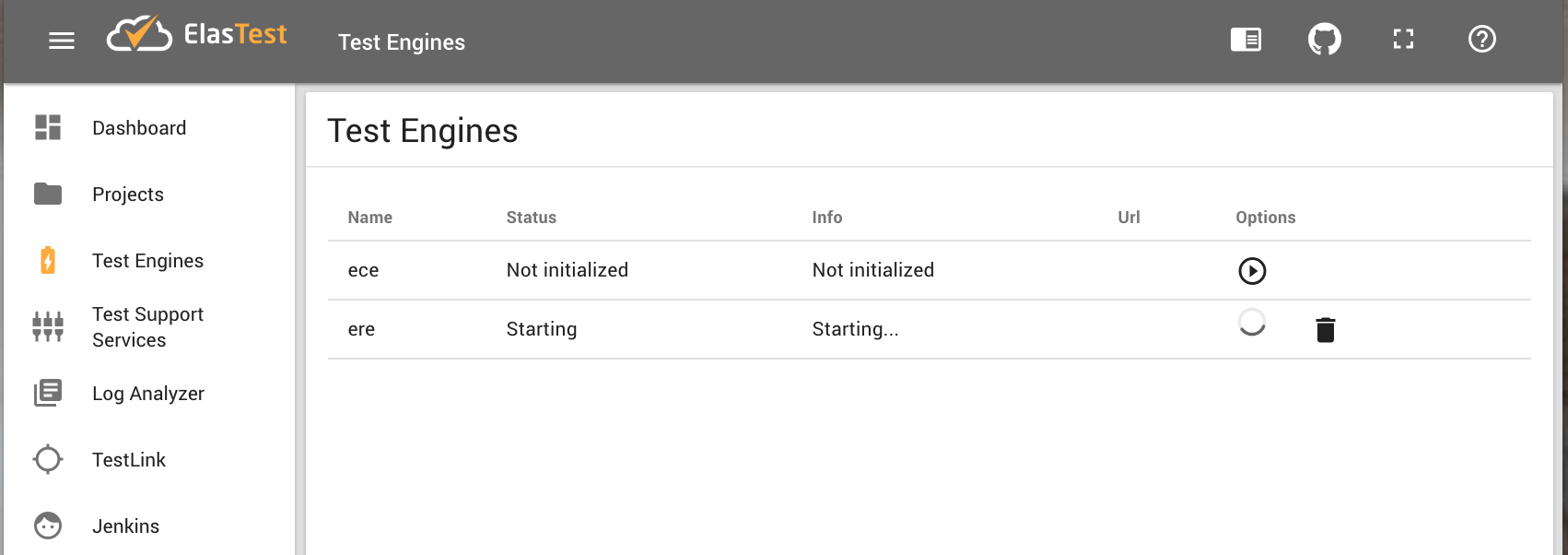

- Start the ElasTest platform:

docker run --rm -v ~/.elastest:/data -v /var/run/docker.sock:/var/run/docker.sock elastest/platform start -m=singlenode

- Use a browser to access the ElasTest Dashboard.

- Navigate to the Test Engines page and start 'ere'.

Note: The first time you start ERE, you need to wait a couple of minutes while the image is pulled from the ElasTest repository. Once the image is present on your system, starting and stopping ERE is quick. The current size of the /elastest/ere-trial image is 4.2 GB (it will be reduced in future releases).

- Click on the eye icon to access the ERE UI.
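
If you start the platform often, the command from the first step can be wrapped in a small script. The following is a minimal sketch, assuming Docker is installed and on the PATH; the function names `build_start_command` and `start_platform` are hypothetical helpers, and the flags are copied from the command above rather than taken from any additional ElasTest documentation.

```python
import os
import shlex
import subprocess


def build_start_command(data_dir="~/.elastest", mode="singlenode"):
    """Return the docker argument list used to start the ElasTest platform."""
    # A shell expands "~" automatically; subprocess does not, so expand it here.
    host_data = os.path.expanduser(data_dir)
    return [
        "docker", "run", "--rm",
        "-v", f"{host_data}:/data",
        "-v", "/var/run/docker.sock:/var/run/docker.sock",
        "elastest/platform", "start", f"-m={mode}",
    ]


def start_platform():
    """Run the start command in the foreground.

    Raises subprocess.CalledProcessError if docker exits with a non-zero status.
    """
    command = build_start_command()
    print("Running:", shlex.join(command))
    subprocess.run(command, check=True)
```

Calling `start_platform()` runs the same command as the first step; `build_start_command()` on its own only builds the argument list, which is handy for logging or dry runs.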

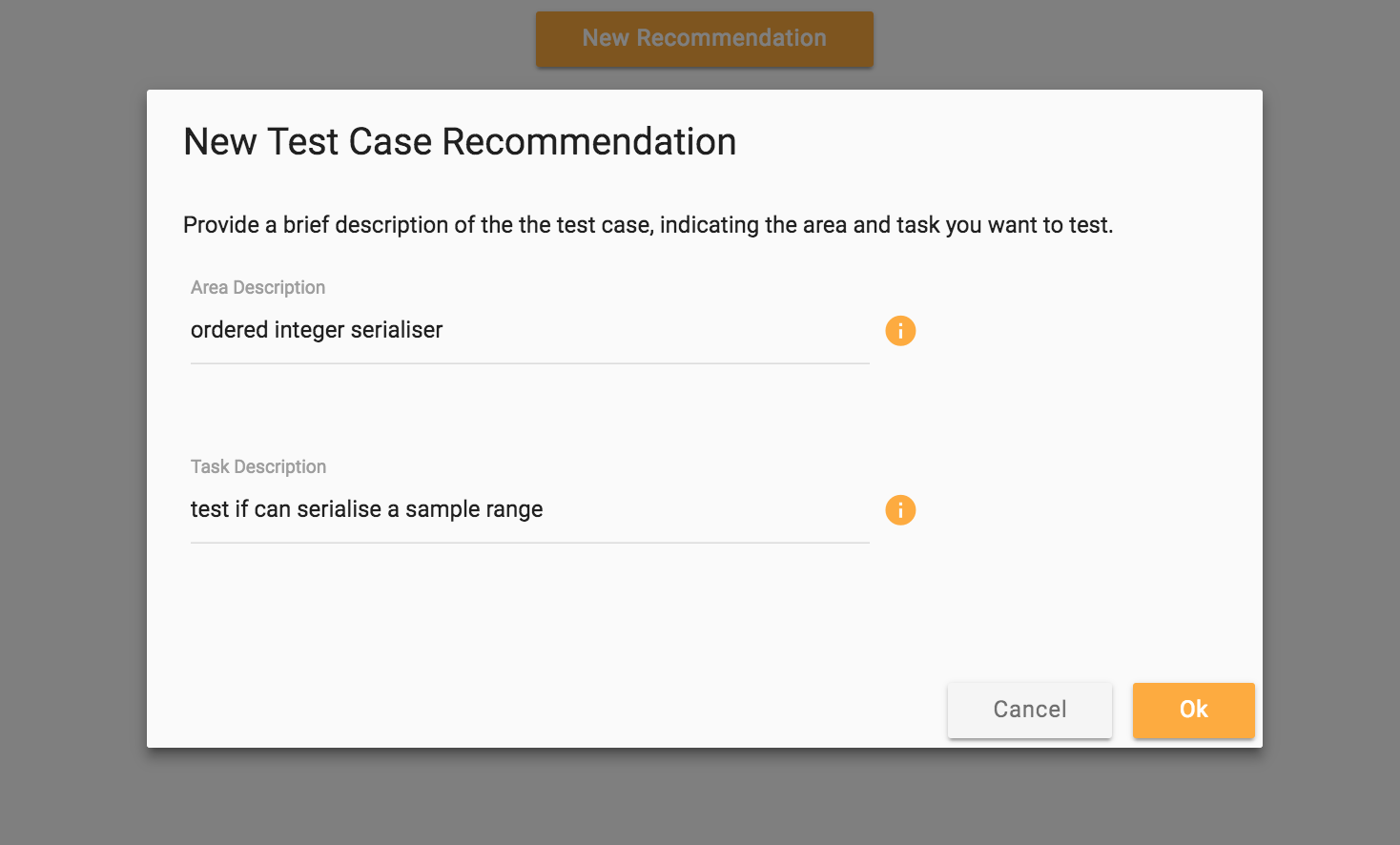

New Recommendation wizard

Click the 'New Recommendation' button on the main page to enter a description of a test case. In the 'Area Description' field, provide a general description of the tested area, such as the functionality of the class under test. In the 'Task Description' field, indicate the specific task or functionality to be tested. Click OK to submit your query.

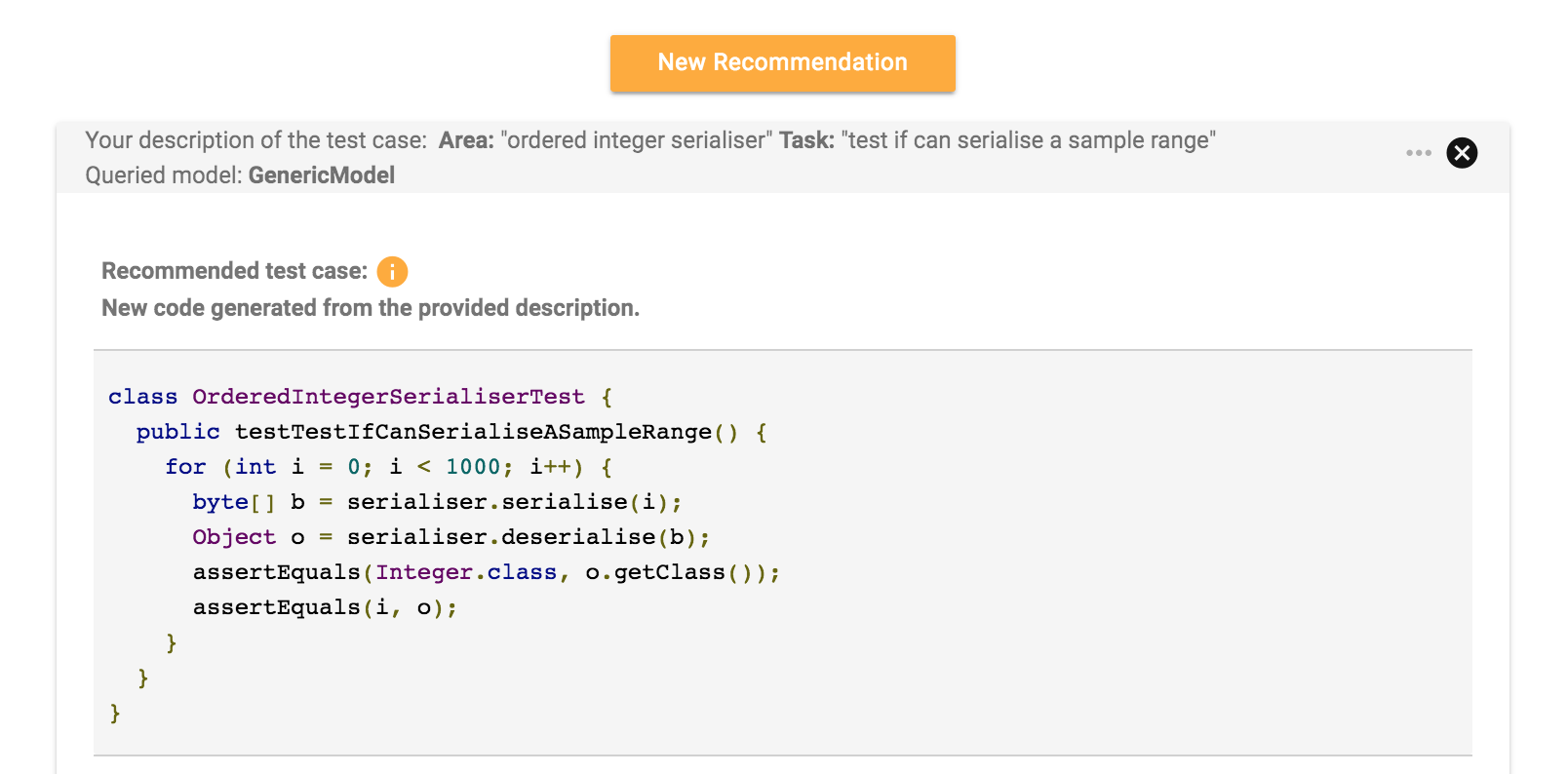

Results Page

For each query, ERE produces two types of recommendations: (1) new code generated from the area and task description, and (2) a list of existing test cases retrieved from the software repository based on their similarity to the generated test case.

Generated code

The new code inferred by the machine learning model is checked for syntax. If it complies with basic Java syntax rules, it is displayed at the top of the page (embedded into a mock class); otherwise it is skipped.
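
The embed-then-check flow described above can be illustrated with a short sketch. This is not ERE's actual checker, which is not described here: the mock class layout (`MockTest`, `generatedTest`) is a hypothetical template, and the check is a deliberately crude stand-in that only verifies balanced brackets outside of string and character literals, where a real implementation would use a Java parser.

```python
# Hypothetical mock class; the real template used by ERE may differ.
MOCK_CLASS_TEMPLATE = """public class MockTest {{
    @Test
    public void generatedTest() {{
{body}
    }}
}}
"""

CLOSING_TO_OPENING = {")": "(", "]": "[", "}": "{"}


def embed_in_mock_class(snippet):
    """Indent the generated snippet and place it inside the mock test method."""
    indented = "\n".join("        " + line for line in snippet.strip().splitlines())
    return MOCK_CLASS_TEMPLATE.format(body=indented)


def has_balanced_delimiters(source):
    """Crude plausibility check: brackets must pair up outside quoted literals."""
    stack = []
    quote = None      # the quote character we are currently inside, if any
    escaped = False   # True right after a backslash inside a literal
    for ch in source:
        if quote is not None:
            if escaped:
                escaped = False
            elif ch == "\\":
                escaped = True
            elif ch == quote:
                quote = None
            continue
        if ch in "\"'":
            quote = ch
        elif ch in "([{":
            stack.append(ch)
        elif ch in CLOSING_TO_OPENING:
            if not stack or stack.pop() != CLOSING_TO_OPENING[ch]:
                return False
    return not stack and quote is None


def render_if_plausible(snippet):
    """Return the mock class source, or None if the snippet should be skipped."""
    source = embed_in_mock_class(snippet)
    return source if has_balanced_delimiters(source) else None
```

A snippet such as `assertEquals(4, calc.add(2, 2));` is returned wrapped in the mock class, while one with a missing closing parenthesis yields `None` and would be skipped. Comments and many genuine syntax errors are not handled by this check.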

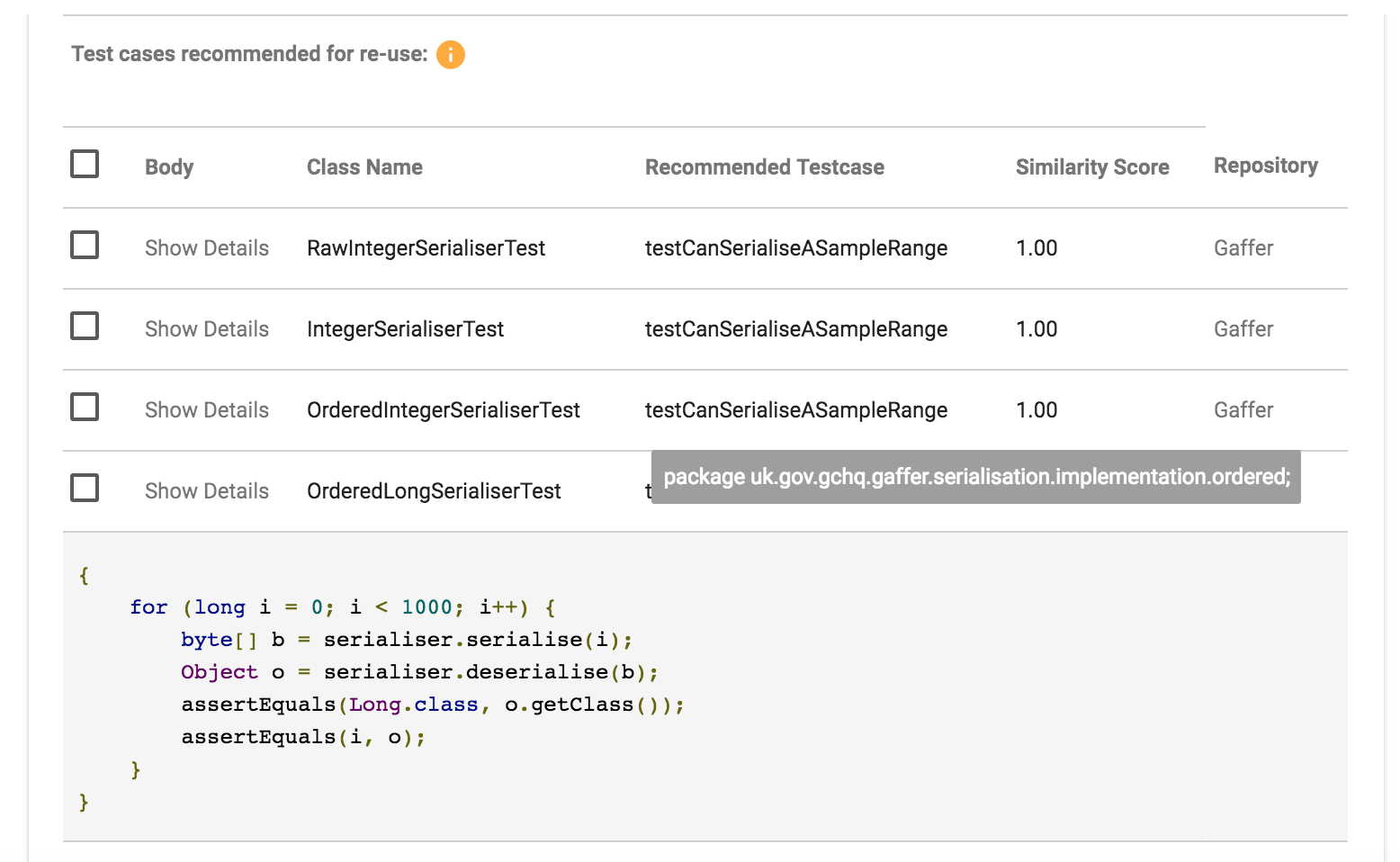

Test cases for reuse

The list of existing test cases recommended for reuse is displayed in a table. The table provides information that helps to locate the reusable test cases in the repository. Each row includes the name of the class containing the recommended test method and the name of that method, as well as a score indicating the degree of similarity to the generated code.
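
To make the row structure concrete, here is a minimal sketch of how such a table could be modelled. The type `ReusableTestCase`, its field names, and the descending sort order are assumptions made for illustration; the text above does not specify the score range or how ERE orders the rows.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReusableTestCase:
    """One table row (hypothetical model, not ERE's internal type)."""
    qualified_class: str  # package plus class name, e.g. "org.example.FooTest"
    method: str           # name of the recommended test method
    score: float          # similarity to the generated code; assumed higher = closer

    @property
    def class_name(self):
        # Simple class name shown in the table.
        return self.qualified_class.rpartition(".")[2]

    @property
    def package(self):
        # Package name, i.e. the part shown when hovering over the class name.
        return self.qualified_class.rpartition(".")[0]


def format_table(rows):
    """Render rows as plain text, most similar first (ordering is an assumption)."""
    ordered = sorted(rows, key=lambda row: row.score, reverse=True)
    lines = [f"{'Class':<20} {'Method':<25} {'Score':>6}"]
    for row in ordered:
        lines.append(f"{row.class_name:<20} {row.method:<25} {row.score:>6.2f}")
    return "\n".join(lines)
```

Given two made-up rows, for example `ReusableTestCase("org.example.calc.CalculatorTest", "testAdd", 0.42)` and `ReusableTestCase("org.example.util.StringUtilsTest", "testTrim", 0.87)`, `format_table` lists `StringUtilsTest` first because its score is higher.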

Click on the 'Show Details' link to display the body of the method.

Hover over a class name to view its package name.

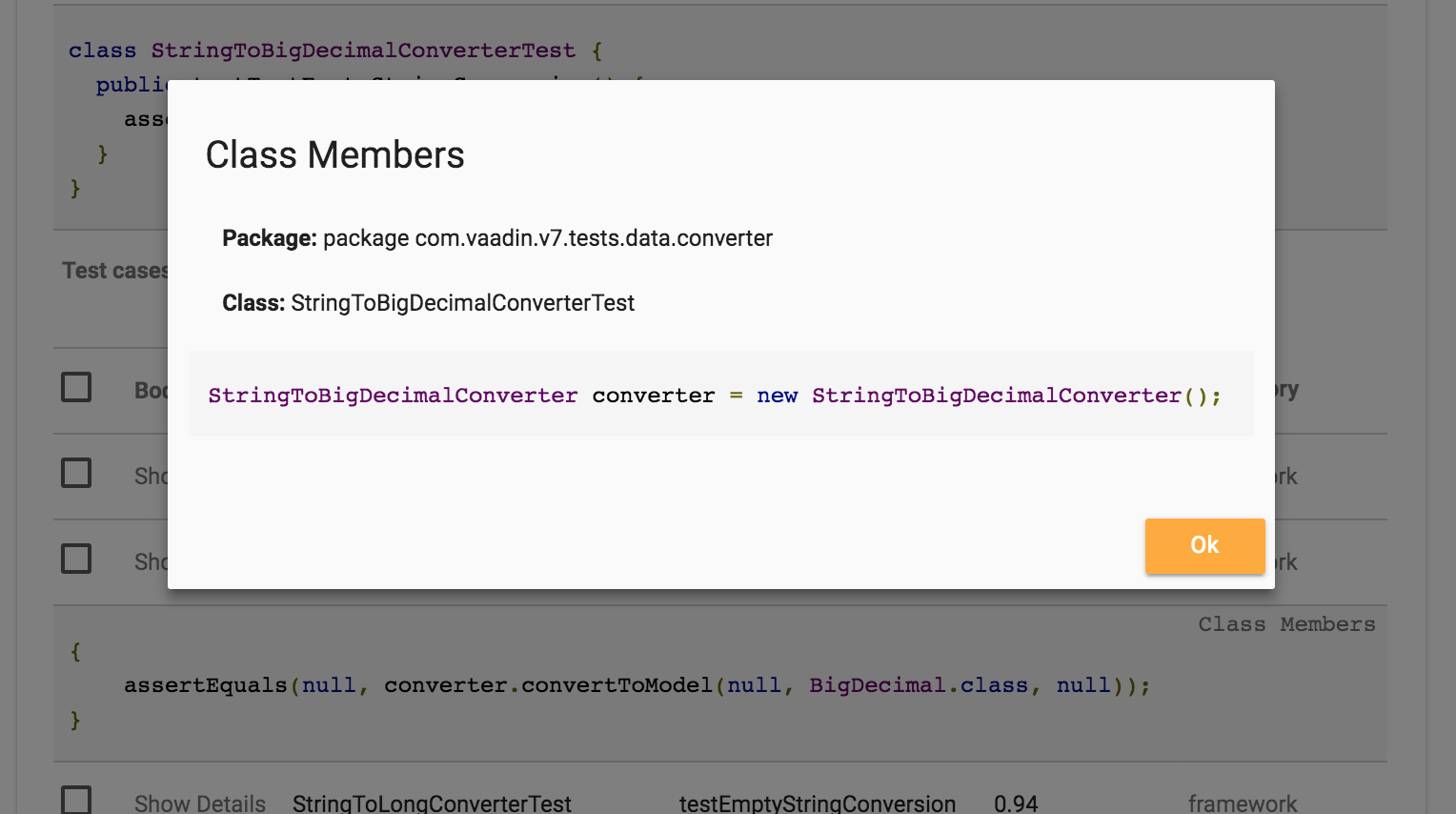

Click on the 'Class Members' link (if available) to view the members of the class containing the recommended test case.

Click on the 'Repository' link to open the repository of origin and inspect the context of the recommended test case.
