European Commission Project

ElasTest Platform is being developed within a publicly funded project called "ElasTest: an elastic platform for testing complex distributed large software systems". This project is funded by the European Commission under the ICT-10-2016 topic of the Horizon 2020 programme.

ElasTest Project information is published in the Community Research and Development Information Service (CORDIS).

The elastest.eu website contains more information about the European project. You can also download the official documents (deliverables) describing the project's progress from there.

ElasTest Partners

The ElasTest project is being developed by a consortium of European academic institutions, research centers, large industrial companies and SMEs. The partners are described in detail in the following subsections:

ElasTest ambition

Software testing is one of the most complex and least understood areas of software engineering. When creating software, the testing process commonly accounts for the largest share of the costs, effort and time-to-market of the whole software development lifecycle. For example, Mili and Tchier report in their book on software testing that the creation of Windows Server 2003 required around 2,000 engineers for analysis and development but 2,400 for testing. This situation is getting worse with the emergence of highly distributed and interconnected heterogeneous environments (i.e. Systems in the Large, SiL), and testing SiL appropriately is becoming prohibitively expensive for many software and service providers.

This deters innovation and creates significant risks for society, given the pervasiveness of software in most human activities. The situation is mainly caused by complexity effects: when a software under test (SuT) grows by N lines of code, the complexity of its tests does not grow proportionally, because these N new lines may introduce many new interactions. Hence, software grows linearly but its testing complexity may grow exponentially.

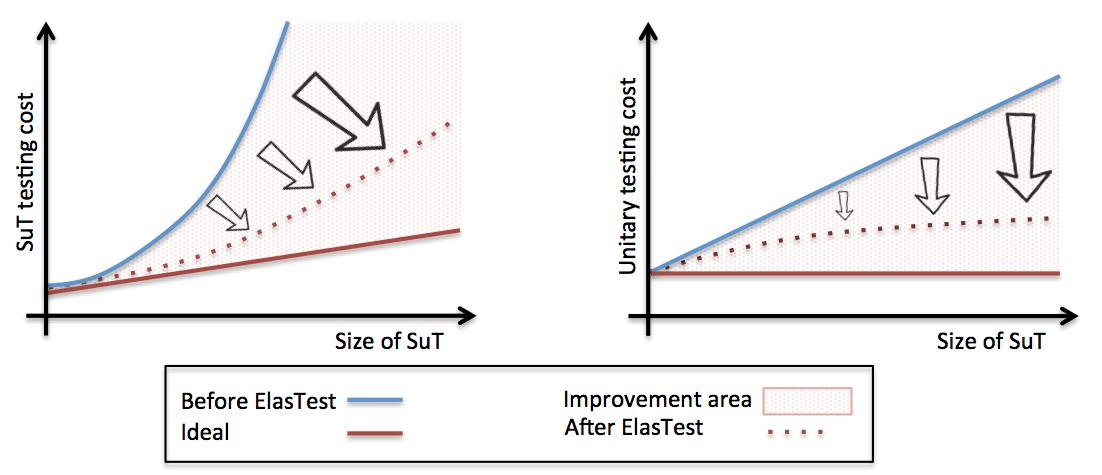

As introduced before, a SiL is an aggregation of systems in the small (SiS). Hence, following the same logic, when integrating a new SiS in a SiL the final testing costs are significantly over the ones of testing independently the comprising SiS. This effect is well known in software engineering and it is illustrated in the next figure.

Our assumption is that SiL grow through the aggregation of SiS that interact following some orchestration rules. Hence, we hypothesize that appropriate orchestration of T-Jobs should also enable validation of the interactions among such functions. As a result, in the ideal case (i.e. with zero test orchestration effort), the marginal cost of testing a newly added function should be constant (red line, right picture) and the total cost of SiL testing should grow linearly (red line, left picture). In the current state of the art (SotA), test orchestration technologies are not widespread and are built ad hoc, which is why interactions are typically tested manually, leading to increasing marginal costs (blue line, right picture) and super-linear total costs (blue line, left picture).

As can be observed in the previous figure, in an ideal world in which SiS do not interact inside a SiL (solid red line), testing costs would grow linearly with the size of the SiL (left picture) and the marginal cost of testing would be constant (right picture). In reality (solid blue line), however, testing costs grow super-linearly (left picture) because the marginal cost of testing is not constant (right picture), due to the complex interactions within the SiL.
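The cost argument above can be made concrete with a toy model. The sketch below is purely illustrative and is not an ElasTest component: it assumes (hypothetically) that testing a SiS in isolation costs a fixed `base` amount and that each pairwise interaction between a newly added SiS and those already in the SiL costs a fixed `interaction` amount. With `interaction = 0` (interactions validated for free, the red lines) the marginal cost is constant and the total grows linearly. With any positive `interaction` (interactions tested manually, the blue lines) the marginal cost grows with the size of the SiL and the total grows quadratically, which is already super-linear; higher-order interactions would make the growth steeper still.

```python
def marginal_cost(k: int, base: float, interaction: float) -> float:
    """Cost of testing the k-th SiS added to a SiL (k >= 1).

    Hypothetical model: `base` covers the new SiS in isolation, and
    `interaction` covers each of its pairwise interactions with the
    k - 1 SiS already present in the SiL.
    """
    return base + interaction * (k - 1)


def total_cost(n: int, base: float, interaction: float) -> float:
    """Total cost of testing a SiL built by adding n SiS one at a time."""
    return sum(marginal_cost(k, base, interaction) for k in range(1, n + 1))


if __name__ == "__main__":
    for n in (1, 10, 100):
        ideal = total_cost(n, base=1.0, interaction=0.0)  # interactions covered for free
        real = total_cost(n, base=1.0, interaction=0.1)   # interactions tested manually
        print(f"n={n:>3}  ideal={ideal:8.1f}  real={real:8.1f}")
```

With these hypothetical values, a SiL of 100 SiS costs 100 units in the ideal case but 595 units when interactions are tested manually, since the interaction term alone contributes 0.1 × 100 × 99 / 2 = 495 units.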

ElasTest's ambition is to advance the SotA by creating novel test orchestration technologies, and thereby to get as close as possible to this ideal.

ElasTest progresses beyond the state of the art

ElasTest will provide relevant advances beyond the SotA from both a scientific and an innovation perspective. The following table summarizes these advances.

| Competences | Current SotA | Expected SotA evolutions |
|---|---|---|
| Test orchestration | No notion of orchestration; Limited methods or tools for testing SiL; Strong assumptions on tests and/or SuT; Hard integration with CI tools | Solid orchestration notation (topology); Systematic divide-and-conquer testing of SiL; No assumptions on tests or SuT; Ready to be used with CI tools |
| Non-functional testing | Non-functional testing is a challenge; Poor tools and methodologies; Ad-hoc non-reusable code required; No tests for costs or energy; No tools for multimedia QoE testing; No tools for IoT QoS testing | Non-functional testing is a feature; Powerful tool and methodology; Reusable orchestration logic; Ready for costs and energy testing; Ready for QoE multimedia testing; Ready for QoS testing in IoT environments |
| Security testing | Simple client/server configuration testing; Specific to a single application vulnerability; Limited protocol semantics; Crash oracle | Support for multiple entities; Generic Security Check as a Service framework; Fine-grained protocol grammar language; Fine-grained oracles |
| GUI automation and impersonation | GUI user impersonation; Poor tools for code reusability | User + sensor + device impersonation; Reusable SaaS test support services |
| Monitoring and runtime verification | Methodologies and tools for testing SiS; Monitors are monolithic; No mechanism for monitor coordination; No mechanism for large fragmented logs | Methodologies and tools for testing SiL; Full orchestration of monitors; Language for monitor orchestration; Algorithms for large and fragmented logs |
| Machine learning applications to testing | Focus on automating processes; Work on lab and controlled environments | Focus on supporting the tester; Work on real-world SiL |
| Cognitive Q&A systems | Powerful tool for knowledge reusability, but never applied to software testing | Q&A for designing T-Jobs; Q&A for designing orchestration; Q&A for test design strategy; Full testing knowledge reusability |
| Instrumentation | Only available in specific clouds; May require special permissions; Fragmented, non-uniform APIs | Infrastructure-independent capabilities; Available for all cloud users; Coherent, unified and uniform API |

Roadmap

Year 2017

The project takes as inputs the ideas specified in the objectives and a set of input technologies provided by the partners. From them, the consortium carries out the research and development activities that create the ElasTest services. These are validated through experimental proofs of concept. During this year, the agile cycle shall be limited to the Define, Build and Learn stages, since research and development are the main focus of effort during the first three releases.

| Milestone number | Title | Date | Objectives |
|---|---|---|---|
| MS1 | First ElasTest Architecture | April | First agile cycle (Release 1) executed generating a first |
| MS2 | First ElasTest artifacts | August | Second agile cycle (Release 2) executed generating initial software |
| MS3 | Early ElasTest Platform | December | First version of the ElasTest platform ready and |

Year 2018

The platform is integrated as a whole through a CI methodology, supported by a CI tool that executes tests validating the platform in a controlled lab environment. These tests capture requirements from four relevant vertical domains (video transmission, Internet of Things, software-defined networks, and web and mobile apps). In parallel, research and development activities continue under that CI umbrella. As a result, ElasTest is validated in relevant industrial environments. Hence, the Test stage shall become important, providing valuable insight into whether ElasTest is appropriately implemented.

| Milestone number | Title | Date | Objectives |
|---|---|---|---|
| MS4 | ElasTest CI system | April | ElasTest CI system fully qualified and in production |
| MS5 | Mature ElasTest Platform | December | Mature implementation of ElasTest validated in laboratory |

Year 2019

In addition to the research and development and the integration/validation activities, during this phase a demonstration activity is launched in four vertical domains. This demonstration follows a rigorously defined methodology using empirical surveys, comparative case studies and (quasi-)experiments. As a result, the most mature ElasTest results are demonstrated in relevant industrial environments. Hence, the Experiment and Measure stages will be activated through the vertical demonstrators. During this period, the demonstration activities will gain maximum relevance for the project.

| Milestone number | Title | Date | Objectives |
|---|---|---|---|
| MS6 | ElasTest fully qualified | December | Fully qualified ElasTest platform validated through demonstrators, as specified in WP7, |

Advisory Board

The mission of the Advisory Board is to reinforce the activities of the project by introducing the perspective of external experts from other organizations. The Advisory Board will not require formal meetings; instead, its members may be called upon individually or in groups to provide advice and relevant information on the strategic aspects of the project, to mediate conflicts, to assess risks and to analyze performance. The members of ElasTest’s Advisory Board are listed below.

Shing-Chi Cheung

Shing-Chi Cheung is a professor of Computer Science and Engineering at the Hong Kong University of Science and Technology (HKUST). He holds a PhD degree in Computing from Imperial College London. He founded the CASTLE research group at HKUST and in 2006 co-founded the International Workshop on Automation of Software Testing (AST). He serves on the editorial boards of Science of Computer Programming (SCP) and the Journal of Computer Science and Technology (JCST). He participates actively in the program and organizing committees of major international software engineering conferences. His research interests lie in software engineering methodologies that boost developer productivity and code quality using program analysis, testing and debugging, machine learning, crowdsourcing and open-source software repositories. He is a Distinguished Scientist of the ACM and a Fellow of the British Computer Society.

Stefano Russo

Stefano Russo, PhD, graduated in Electronic Engineering in 1988 from the Federico II University of Naples (UNINA), Italy. From 1989 to 1990 he was a software engineer at Alfa Romeo Avio SpA, an aircraft manufacturing company near Naples. He received his PhD in 1993, and he has been a Professor at UNINA since 2002, where he teaches Software Engineering and Distributed Systems. He is currently at the Department of Electrical Engineering and Information Technology (DIETI), where he leads the DEpendable Systems and Software Engineering Research Team (DESSERT). In 2011 he co-founded the spin-off company Critiware s.r.l., and in 2013 he co-founded the Italian Association for Software Engineering (AIIS). Since 2013 he has been an IEEE Senior Member and an Associate Editor of IEEE Transactions on Services Computing. Stefano has (co-)authored more than 160 scientific publications and supervised 14 PhD theses. His research over more than 20 years covers software engineering, software dependability, software testing, software aging, cloud and service computing, middleware services and technologies, service-oriented architectures, and distributed and mobile computing.

Jose Lausuch

Jose Lausuch is a Senior Software Developer at SUSE (Nuremberg, Germany) with experience in cloud infrastructure and Network Functions Virtualization (NFV). He is engaged in the OPNFV reference platform and currently leads the Functest project (Functional Testing in OPNFV). His main responsibilities within SUSE include quality assurance of the networking and NFV stack, as well as other testing activities using open-source tools and contributing upstream.

Eric Knauss

Eric Knauss is Associate Professor at the Department of Computer Science and Engineering, Chalmers | University of Gothenburg, where he has established Sweden’s largest requirements engineering research group. His research focuses on managing requirements and related knowledge in large-scale, distributed software development, including Requirements Engineering, Software Ecosystems, Global Software Development, and Agile Methods. He has co-authored more than 50 publications in journals and international conferences, has been on organization and program committees of top conferences in the field (incl. ICSE and RE), and is a reviewer for top journals (incl. TSE, IST, JSS, IEEE SW). In national and international research projects, he collaborates with companies such as Bosch, Ericsson, Grundfos, GM, IBM, Siemens, TetraPak, and Volvo.

José Turégano

José Turégano is responsible for Quality in the Center of Excellence in SQA & Testing at Panel Sistemas Informaticos. He has 7 years of experience as head of internal corporate development, where he created business concepts such as "ecological manufacturing", and he is responsible for DevOps and Agile / Lean activities. He is primarily focused on the development of the Zahori Test Automation Software. Panel Sistemas is a technology company specialized in software development, quality assurance, and value-added IT outsourcing services. The company was founded in 2003 and has since grown faster than the IT services sector average in Spain. In 2016 its turnover was 20 million euros, and today Panel Sistemas has a team of more than 400 professionals. More than 50 leading companies in different sectors (banking, telco, industry, transportation) endorse its work, and the company has developed more than 1,200 software projects at national and international level. Panel Sistemas has two Centers of Excellence, specialized in Software Development and Software Quality Assurance (SQA). Both Centers work in an integrated way under a working model, which they call ecoLOGICAL software manufacturing, that uses sustainable and profitable processes, based on people, for the creation of software, systems and services. Their goal is to ensure quality and satisfactorily deliver the value that their customers expect.

Maaret Pyhäjärvi

Maaret Pyhäjärvi is a software professional with a testing emphasis. She identifies as an empirical technologist, a tester and a programmer, a catalyst for improvement and a speaker. Her day job is working with a software product development team as a hands-on testing specialist. On the side, she teaches exploratory testing and makes a point of adding new, relevant feedback to test-automation-heavy projects through skilled exploratory testing. In addition to being a tester and a teacher, she is a serial volunteer for different non-profits driving forward the state of software development. She was recently named Most Influential Agile Testing Professional Person 2016. She blogs regularly at http://visible-quality.blogspot.fi and is the author of the Mob Programming Guidebook.